By Cynthia Meyersohn

This article closes out a series focused on the data replication introduced by the processes outlined in “Build a Schema-On-Read Analytics Pipeline Using Amazon Athena”, by Ujjwal Ratan, Sep. 29, 2017, on the AWS Big Data Blog, https://aws.amazon.com/blogs/big-data/build-a-schema-on-read-analytics-pipeline-using-amazon-athena/ (Ratan, 2017). Here I will talk about an approach that I believe is effective in delivering the value that businesses are truly seeking from their investment in the infrastructure and technologies used to deliver “Data Lake”-scale business intelligence.

As a reminder, I started this series to focus on data movement and replication processes associated with Mr. Ratan’s breakdown of the AWS Schema-on-Read analytics pipeline. My goal has been to answer the question, “Does the size of the data now being delivered to the business make a compelling argument that the data should not be moved around as much?”

Mr. Ratan uses a small (even tiny) dataset in his example, but in a Data Lake environment, companies are interested in loading terabytes, petabytes, or even larger sets of data. In reality, size or volume does matter a great deal, so one of our goals should be to reduce the number of times volumes of data are moved around or replicated.

Continued from The Zinger …

Diagram 4 below shows how, by following Steps 1 – 4 outlined in Mr. Ratan’s article, and covered in Parts 1 – 3 of this series, you would end up with data being replicated ad nauseam throughout the S3 environment and persisted indefinitely in the S3 buckets. For all of the reasons previously stated, I am not a big fan of this approach. It seems that Diagram 4 is really aimed at justifying building a “Data Lake” on AWS S3 storage. What is really being built, though, is a one-way Data Swamp because there is no true architectural design for the proposed “Data Lake”. Anything approaching design has been left to the tools chosen in the author’s example.

Diagram 4 (Ratan, 2017)

Now, let’s compare Diagram 4 above to Diagram 5.

What Does Work?

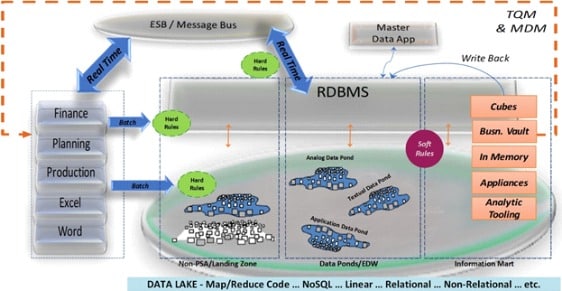

Diagram 5 illustrates a Data Vault 2.0 solution (Linstedt, Building a Scalable Data Warehouse with Data Vault 2.0, 2016) in a Data Lake environment (represented by a set of Data Ponds) envisioned and developed by Bill Inmon (Inmon, 2016) – two complementary approaches that I believe do work and do have the best potential to bring the business the value it deserves.

Diagram 5 (Linstedt, 2018) (Inmon, 2016)

The DV2 architecture in Diagram 5 illustrates the ubiquitous nature of the solution and displays the placement of Bill Inmon’s Data Ponds within the architecture. Notice that there is no direct tie or reliance on a particular technology or database platform – it is not “tools-based”.

Note also that the architecture is designed to handle data ingests from traditional batch processes as well as real-time feeds from an enterprise service or messaging bus. The arrows between the traditional RDBMS and Data Lake environments indicate the data integration between the RDBMS and Data Lake technologies. (No, you don’t have to throw all of your legacy data into the Data Lake to integrate it … IF you have the right data warehousing solution.)

A few of the DV2 methodology and implementation components are highlighted in the diagram to appropriately allocate the application of hard business rules versus soft business rules, and to delineate where each type of rule is to be applied to the data. As part of the data engineering life-cycle, the diagram highlights the impact of managed self-service BI on the overall health and quality of the enterprise data through the use of TQM (Total Quality Management) and MDM (Master Data Management) feedback loops.

A critical feature of managed self-service BI shown in the diagram is the concept of write-back loops. Write-back loops enable the Enterprise Data Warehouse to capture the knowledge gained from the information enriched by business consumers and analysts, and write the enriched information back into the EDW. This preserves the knowledge and insights gained, making these assets available to the enterprise as a whole, encouraging information sharing.

In essence, a DV2 data warehousing implementation protects the “corporate memory” by preserving the raw data, integrating that data, delivering information to the business analysts, and capturing the knowledge and insights gained from the information analysis back into the EDW. And the DV2 architecture ensures that the business has the flexibility to scale its EDW without huge re-engineering efforts.

Compare the DV2 architecture in Diagram 5 to the Kimball-style and AWS architectures that Mr. Ratan based his article on and which are illustrated in Diagrams 6 and 7, respectively, provided below.

Diagram 6 (Ratan, 2017)

Diagram 7 (Ratan, 2017)

Diagram 6 implies that only relational databases comprise the foundation of a data warehouse, while Diagram 7 shows all data sitting on AWS storage and being processed using AWS components. If you are an IT or non-technical manager or a C-level executive, both Diagrams 6 and 7 would lead you to conclude that a data warehouse is defined as data that is housed on some platform or another. Nothing could be further from the truth.

Bill Inmon, a.k.a. “The Father of Data Warehousing”, defined a data warehouse in 1992 as, “a subject oriented, nonvolatile, integrated, time variant collection of data in support of management’s decisions”. Mr. Inmon recently updated his definition during his presentation at the World-Wide Data Vault Consortium. As of May 2018, he now defines a data warehouse as, “a subject-oriented, integrated (by business key), time-variant and non-volatile collection of data in support of management’s decision-making process, and in support of auditability as a system-of-record”.

The bottom line is that a data warehouse is not tied to any platform or technology. Nowhere in the definition of a data warehouse is there a stated technology, so why do so many people try to impose platform or technology constraints on the concept of a data warehouse?

Look again at Diagram 5. Ask yourself, do you see a technology-dependent or platform-dependent data warehouse architecture? No, you do not. The DV2 solution (i.e., the methodology, architecture, implementation, and model) is technology and platform agnostic.

Diagram 5 illustrates how these two solutions, a Data Vault 2.0 solution implemented on Bill Inmon’s concept of Data Ponds, will support the critical components that an architect needs to address when designing an analytical platform, a.k.a., an Enterprise Data Warehouse in a Data Lake.

The critical components I am referring to center on people, process, and technology, with technology being but one component. Together they enable the application of architectural discipline and methods such as data engineering, common business keys, agile software development, model design, data integration, maintenance, sustainability, data life-cycle, flexibility, scalability, repeatability, governance, auditability, security, pattern-based processes and structures, automation (pattern-based auto-code generation), and master data management. All of these methods should be included in the starting list of what a data architect must consider when designing an analytical platform.

Envisioning the Data Lake – The End Game

When it comes to envisioning a Data Lake, I clearly prefer Mr. Inmon’s concept of creating Data Ponds that address both the data and the processing. His approach is thoughtful, comes from one of the industry’s formidable thought-leaders, is backed by a wealth of experience and knowledge, and provides for all of the criteria that an architect should be designing to when it comes to analytical platforms … the criteria that are required for proper data engineering.

Mr. Inmon’s approach is sustainable, repeatable, pattern-based, auditable, allows for data governance, security, compliance, integration, and separation of hard and soft business rules … basically, it aligns perfectly with the Data Vault 2.0 solution in a Data Lake and supports my answer to this article’s title, “Does a Pattern-Based DW Architecture Still Hold Value Today?” Absolutely.

In fact, the Data Vault 2.0 is of more value in this “brave new world” of Big Data and Data Lakes than ever before. Why? Because the Data Vault 2.0 solution was engineered for the future, and the future is here. The Data Vault 2.0 solution addresses the three key components of any Enterprise Data Warehouse endeavor – people, process, and technology.

In Mr. Inmon’s book, Data Lake Architecture – Designing the Data Lake and Avoiding the Garbage Dump, he describes the premise for a Data Lake architecture that makes sense because it doesn’t leave the data as data, but rather allows for the data to be conditioned and turned into information. Interestingly, Mr. Inmon’s approach does not recommend a gigantic PSA. The data is not replicated and proliferated throughout the Data Lake … it is managed.

Mr. Inmon outlines why it is a better design to break the Data Lake into five specific data ponds – (1) a raw data pond (non-persistent staging area), (2) an analog data pond, (3) an application data pond, (4) a textual data pond, and (5) an archival data pond. His approach aligns with the long-recognized data life cycle process.

Inmon, 2016

Furthermore, and of significant importance, Mr. Inmon outlines the four basic ingredients that are needed to “turn the data lake into a bottomless well of actionable insights” (Inmon, 2016) … those ingredients are (1) metadata, (2) integration mapping, (3) context, and (4) metaprocess … all elements that the author completely missed as architectural criteria.

Each of these four ingredients is directly addressed by Dan Linstedt’s Data Vault 2.0 methodology and architecture. The DV2 methodology and architecture are optimized through the implementation of the model, regardless of whether the model is physical or logical.
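To make the four ingredients concrete, here is a minimal sketch of a catalog record that tracks whether each ingredient has been captured for a dataset. The class name `PondDatasetEntry`, its fields, and the sample values are hypothetical illustrations; they do not come from Mr. Inmon’s book or from the Data Vault 2.0 standards.

```python
from dataclasses import dataclass, field


@dataclass
class PondDatasetEntry:
    """Hypothetical catalog record holding the four ingredients for one dataset."""
    name: str
    metadata: dict = field(default_factory=dict)             # e.g. column names and types
    integration_mapping: dict = field(default_factory=dict)  # source field -> business key
    context: str = ""                                        # where and why the data was produced
    metaprocess: list = field(default_factory=list)          # processing steps applied so far

    def missing_ingredients(self) -> list:
        """Return the names of the ingredients that have not been captured yet."""
        ingredients = {
            "metadata": self.metadata,
            "integration_mapping": self.integration_mapping,
            "context": self.context,
            "metaprocess": self.metaprocess,
        }
        return [label for label, value in ingredients.items() if not value]

    def is_governable(self) -> bool:
        """A dataset is treated as governable only when all four ingredients exist."""
        return not self.missing_ingredients()


# Illustrative usage with made-up values
entry = PondDatasetEntry(name="claims", metadata={"claim_id": "string"})
still_missing = entry.missing_ingredients()
```

A raw file dropped into storage would start with every ingredient missing, which is one way to picture why raw data alone cannot be governed.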

As Mr. Inmon aptly points out in his book, “When only raw data is stored in the data lake, the analyst that needs to use that data is crippled” (Inmon, 2016) and furthermore, “raw data by itself just isn’t very useful.” (Inmon, 2016) Clearly, in order for raw data to have business value it must be turned into information through integration and the application of a variety of business rules.

Inmon, 2016

Some of those business rules are used to cleanse the data, others are used to aggregate and summarize the data, and still others are used to correlate the data. Some business rules are complex and others are not.

The AWS Athena approach discusses these factors as well, but in the example used, the data has been replicated multiple times and proliferated throughout the AWS S3 environment to arrive at a state where it provides limited business value by remaining an isolated dataset. In its current state, can it be integrated with the rest of the business’ data? Maybe … maybe not. Data integration does not just “happen”, it must be designed.

From both Mr. Inmon’s and Mr. Linstedt’s perspectives, delivering managed self-service business intelligence (BI) instead of self-service BI positions the business to take immediate advantage of its enterprise information. Using managed self-service BI, the information is not just “fit” for discovery or experimentation by data scientists who are both expensive and hard to come by, but the information is conditioned in such a way that it has general usability (gee, what a concept!). The Data Vault 2.0 Information Delivery approach is focused on providing managed self-service business intelligence.

In other words, implementing the DV2 approach of managed self-service BI coupled with Bill Inmon’s vision of a series of data ponds means the data has been conditioned for use by anyone in the organization that needs it. Not only is it prepared for general usability, but because the metadata, metaprocesses, integration map, and context have been captured, it can also be governed. In essence, the data is now suitable for governance, and governance provides consistency; and with consistency comes a degree of certainty that the business deserves from the investment that it has poured into its Data Lake technologies.

Another element of Mr. Inmon’s concept of Data Ponds is that he breaks data sets into three distinct categories – analog, application, and textual. The reason these are categorized in this fashion is to apply the right technology to the right dataset based on the right conditioning requirements as defined by the data set.

That means analog data, which is voluminous and also ‘noisy’, is conditioned according to its type so that it is reduced to the data that matters – cut through the noise, get to the chase. Application data, being traditionally more structured, is conditioned accordingly. Finally, textual data is conditioned and put into context (a critical factor of textual data) so that it can be adequately processed and correctly analyzed. Once data is deemed no longer relevant, it is moved to the Archival data pond and managed under the organization’s data life-cycle retirement policies.
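The flow described above can be sketched as a small routing function. This is only an illustration of the idea: the names `Pond`, `KIND_TO_POND`, and `route`, and the two boolean flags, are hypothetical and are not taken from Mr. Inmon’s book.

```python
from enum import Enum


class Pond(Enum):
    RAW = "raw"
    ANALOG = "analog"
    APPLICATION = "application"
    TEXTUAL = "textual"
    ARCHIVAL = "archival"


# Hypothetical mapping from a dataset's category to the pond that conditions it
KIND_TO_POND = {
    "analog": Pond.ANALOG,
    "application": Pond.APPLICATION,
    "textual": Pond.TEXTUAL,
}


def route(kind: str, conditioned: bool, still_relevant: bool = True) -> Pond:
    """Pick a pond for a dataset based on its category and life-cycle state."""
    if not still_relevant:
        return Pond.ARCHIVAL       # retired under the data life-cycle policy
    if not conditioned:
        return Pond.RAW            # not yet conditioned, so it stays in staging
    return KIND_TO_POND[kind]      # conditioned according to its category


sensor_pond = route("analog", conditioned=True)
old_contracts_pond = route("textual", conditioned=True, still_relevant=False)
```

The point of the sketch is that the routing decision depends on the nature of the data and where it sits in its life cycle, not on any particular storage product.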

Inmon, 2016

Applying the right technology to the right dataset based on the right conditioning requirements implies that this series of data ponds integrate to become a true data warehouse – a subject-oriented, integrated (by business key), time-variant and non-volatile collection of data in support of management’s decision-making process, and in support of auditability as a system-of-record.
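As a rough illustration of what “integrated (by business key)” can mean in practice, the sketch below groups records from different ponds under a shared, normalized business key. The function names, the normalization rule, and the sample records are all hypothetical; a real Data Vault 2.0 implementation defines its own key-handling standards.

```python
from collections import defaultdict


def normalize_key(raw_key: str) -> str:
    """Hypothetical normalization: trim whitespace and upper-case the key."""
    return raw_key.strip().upper()


def integrate(records):
    """Group (pond, business_key, payload) records by normalized business key."""
    integrated = defaultdict(list)
    for pond, business_key, payload in records:
        integrated[normalize_key(business_key)].append((pond, payload))
    return dict(integrated)


# Made-up records arriving from two different ponds
records = [
    ("application", " cust-001 ", {"name": "Acme"}),
    ("textual", "CUST-001", {"note": "renewal call"}),
    ("application", "cust-002", {"name": "Globex"}),
]
by_key = integrate(records)
```

Here the application record and the textual record for the same customer land under one key, even though the two sources formatted the key differently.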

The manner in which Mr. Inmon categorizes and divides the data into various ponds is not tied to any platform or technology, just as the Data Vault 2.0 solution is not tied to any platform or technology.

When it comes to addressing the issues surrounding Big Data that have to do with reducing both data movement and replication, the Data Vault 2.0 solution, coupled with a Data Pond implementation, helps to provide a solid, technical answer to the question, “Does the size of the data now being delivered to the business make a compelling argument that the data should not be moved around as much?”

I believe that tracing through the processes outlined in Mr. Ratan’s article, and counting the number of times data was moved and/or replicated, should be enough to convince anyone to take notice and rethink their approach to a Data Lake implementation. Imagine moving and replicating data that is hundreds, if not thousands, of times more voluminous using the approach outlined in Mr. Ratan’s article. How is a company supposed to (at a minimum) manage, govern, audit, secure, scale, or finance a Data Lake implementation that is based on pure tooling without true architectural design or engineering discipline? Such a notion is absurd.
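A back-of-the-envelope calculation shows why replication matters at scale. The sketch below simply multiplies a dataset’s size by the number of persisted copies; the 500 TB dataset and the four copies are hypothetical numbers chosen for illustration, not counts taken from Mr. Ratan’s article.

```python
def replicated_footprint_tb(dataset_tb: float, copies: int) -> float:
    """Total storage held when a dataset is persisted as `copies` full copies."""
    return dataset_tb * copies


def extra_storage_tb(dataset_tb: float, copies: int) -> float:
    """Storage consumed beyond the single original copy."""
    return replicated_footprint_tb(dataset_tb, copies) - dataset_tb


# Hypothetical inputs: a 500 TB dataset persisted four times along a pipeline
total_tb = replicated_footprint_tb(500, 4)
overhead_tb = extra_storage_tb(500, 4)
```

With these assumed inputs the pipeline holds 2,000 TB, of which 1,500 TB is pure duplication that still has to be stored, secured, and governed.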

Hence … my bold statement that it seems logical that the Data Vault 2.0 System of Business Intelligence is more relevant now than it ever has been … it is the future. In part because its creator, Dan Linstedt, saw the future from the past; and in part because I know that the Data Vault 2.0 solution will continue to grow and adapt to emerging technologies as it has over the years.

How do I know this? Because Mr. Linstedt, the inventor and creator of the Data Vault 2.0 solution, is an innovator and a game changer.

Using Dan Linstedt’s Data Vault 2.0 System of Business Intelligence in conjunction with Bill Inmon’s concept of Data Ponds to include the four critical ingredients of a Data Lake – metadata, integration mapping, context, and metaprocess – will provide an enduring foundation for your Enterprise Data Warehouse; a solution that nearly eradicates both data replication and movement. The end result will be general usability of the enterprise’s data and the ability to generate consistent answers to the business’s questions through a governed, managed, auditable, secure, compliant Business Intelligence foundation.

For more information or to learn more about the engineering solutions presented in this article, I invite you to check out these links:

https://www.linkedin.com/company/forestrim/

Glossary of Terms

AWS (Amazon Web Services) – a secure cloud services platform, offering compute power, database storage, content delivery and other functionality to help businesses scale and grow. (AWS, 2018)

DV2 (Data Vault 2.0 System of Business Intelligence) – an approach to data warehousing originally invented by Daniel Linstedt in the 1990s as Data Vault 1.0, and enhanced into 2000 as Data Vault 2.0 to include engineering best practices in the realm of methodology, architecture, and implementation techniques through the model’s enabling neural network scalability.

ELT (Extract-Load-Transform) – an improved approach to data ingest whereby data is (1) taken from a source (extract), (2) stored after applying only simple business rules, a.k.a., hard business rules required to maintain the grain and metadata attributes of the original source data, into any number of target technologies (load), and (3) conditioned into business-focused information by applying any number of complex business rules to cleanse, standardize, aggregate, summarize, interpolate, and/or filter data for delivery to the end-user (transform).

ETL (Extract-Transform-Load) – a traditional approach to data ingest whereby data is (1) taken from a source (extract), (2) processed by applying a set of business rules, a.k.a., soft business rules, to cleanse, standardize, aggregate, summarize, interpolate, and/or filter data (transform), and (3) stored in its altered or modified state in any number of target technologies (load).
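The difference between the two definitions can be seen in a few lines of code. This is a minimal sketch under stated assumptions: the rule functions, the `record_source` and `load_date` attributes, and the sample row are hypothetical, and real hard and soft rules are far richer than this.

```python
def apply_hard_rules(row: dict, record_source: str, load_date: str) -> dict:
    """Hard rules: keep the source values and grain, only attach load metadata."""
    return {**row, "record_source": record_source, "load_date": load_date}


def apply_soft_rules(row: dict) -> dict:
    """Soft rules: cleanse and standardize, which changes the content."""
    return {**row, "name": row["name"].strip().title()}


def etl(rows):
    """ETL: transform first, so only the altered data is stored."""
    return [apply_soft_rules(row) for row in rows]


def elt(rows, record_source: str, load_date: str):
    """ELT: store the raw rows first, then transform for delivery."""
    raw_store = [apply_hard_rules(row, record_source, load_date) for row in rows]
    delivered = [apply_soft_rules(row) for row in raw_store]
    return raw_store, delivered


source_rows = [{"name": "  ada LOVELACE "}]
raw_store, delivered = elt(source_rows, record_source="crm", load_date="2018-07-20")
etl_store = etl(source_rows)
```

In the ELT path the untouched source value is still available in `raw_store` for audit, while the ETL path keeps only the cleansed value.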

GDPR (General Data Protection Regulation) – The regulation covers what is considered personal data and the corporate responsibility for protecting or removing this data at an individual’s request. The definition of Personal Data has been provided directly from the EU Commission’s website.

“Personal data is any information that relates to an identified or identifiable living individual. Different pieces of information, which collected together can lead to the identification of a particular person, also constitute personal data.

Personal data that has been de-identified, encrypted or pseudoanonymized but can be used to re-identify a person remains personal data and falls within the scope of the law.

Personal data that has been rendered anonymous in such a way that the individual is not or no longer identifiable is no longer considered personal data. For data to be truly anonymized, the anonymization must be irreversible.

The law protects personal data regardless of the technology used for processing that data – it’s technology neutral and applies to both automated and manual processing, provided the data is organized in accordance with pre-defined criteria (for example alphabetical order). It also doesn’t matter how the data is stored – in an IT system, through video surveillance, or on paper; in all cases, personal data is subject to the protection requirements set out in the GDPR.” (Commission, n.d.)

PSA (Persistent Staging Area) – an area of storage space where data is written from a variety of sources, saved either indefinitely or for some pre-defined period of time, and potentially processed into other storage areas or output result sets. PSAs are commonly associated with a Hadoop Distributed File System (HDFS), but a PSA can exist on just about any platform or technical infrastructure.

S3 (Simple Storage Service) – an AWS storage offering that is generally less expensive than Amazon’s Elastic Block Store (EBS)/Elastic Compute Cloud (EC2) or Elastic File System (EFS). S3 is highly scalable and has an eleven nines (99.999999999%) level of durability.

About AWS Storage

I wanted to add a little “techie” stuff related to AWS storage since S3 is being referenced in the article. There are a number of AWS storage options available outside of S3. Each comes with its own price-point and capabilities that should be evaluated.

(https://www.cloudberrylab.com/blog/amazon-s3-vs-amazon-ebs/)

S3 storage is generally less expensive in AWS than Elastic Block Store (EBS)/Elastic Compute Cloud (EC2) or Elastic File System (EFS). It is highly scalable and has an eleven nines level of durability (99.999999999%). That’s awesome. Each file may range in size from 0 to 5 TB, which is probably suitable for most data sets. But what happens when it’s not? Well, the single data set gets split into multiple data files; but I can’t imagine trying to run a schema-on-read against multiple 5 TB files … partitioned or not.
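Two quick calculations put these figures in perspective. The sketch below works out how many files a dataset needs given the 5 TB per-file ceiling, and what an eleven nines durability figure implies if it is read as an annual per-object loss rate of 1e-11 (an assumption about how to interpret the percentage). The function names and sample sizes are hypothetical.

```python
import math

MAX_FILE_TB = 5            # per-file ceiling quoted above
ANNUAL_LOSS_RATE = 1e-11   # what remains after eleven nines, read as a yearly rate


def files_needed(dataset_tb: float, max_file_tb: float = MAX_FILE_TB) -> int:
    """Minimum number of files required to hold a dataset of the given size."""
    return max(1, math.ceil(dataset_tb / max_file_tb))


def expected_annual_losses(object_count: int, loss_rate: float = ANNUAL_LOSS_RATE) -> float:
    """Expected number of objects lost per year under the assumed loss rate."""
    return object_count * loss_rate


twelve_tb_files = files_needed(12)
petabyte_files = files_needed(1024)   # 1 PB expressed as 1,024 TB
losses_per_year = expected_annual_losses(10_000_000)
```

Under these assumptions a single petabyte-sized data set would have to be spread across at least 205 maximum-size files, while ten million stored objects would see an expected loss of about one object every 10,000 years.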

What other storage choices does AWS offer?

EBS storage is currently provided through solid-state drives (SSD), must be used in combination with an EC2 service, and attached to an EC2 instance (mounted). It is not scalable as it mimics volumes of block storage and is provisioned based on a requested size. Additionally, you need to know the IOPS (input/output operations per second), a.k.a., the maximum number of read/write operations you need to perform per second in order to appropriately select one of the three different types of EBS volumes to attach to the EC2 instance. The options are:

- General Purpose (SSD) volumes that give a baseline performance of 3 IOPS/GB with an upper burst threshold of up to 10,000 IOPS. This type of volume is best for AWS databases like PostgreSQL, MS SQL, and Oracle.

- Provisioned IOPS (SSD) volumes that expand the bandwidth bottleneck and allow customers to buy read/write operations on demand regardless of volume capacity. While backed by the same SSD components, these volumes are designed for heavy workloads, from 30 IOPS/GB up to 20,000 IOPS per volume. These volumes can also be striped to ensure up to 48,000 IOPS or 800 MB/s throughput.

- Magnetic volumes are not SSD but rather based on magnetic hard disk drives (HDD). The IOPS is greatly reduced to hundred(s) of IOPS. This is cheap, slow storage designed for applications that don’t require many read/write operations.
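The selection logic implied by the three options above can be sketched as follows. The 3 IOPS/GB baseline and the 10,000 IOPS ceiling are the figures quoted in the list; the 100 IOPS cut-off for magnetic volumes is an assumption standing in for “hundred(s) of IOPS”, and the function names are hypothetical. Current AWS limits may differ, so treat this as an illustration of the reasoning rather than a sizing tool.

```python
def general_purpose_baseline_iops(size_gb: int, iops_per_gb: int = 3,
                                  ceiling: int = 10_000) -> int:
    """Baseline IOPS for a General Purpose volume, capped at the quoted ceiling."""
    return min(size_gb * iops_per_gb, ceiling)


def pick_volume_type(required_iops: int, size_gb: int, magnetic_iops: int = 100) -> str:
    """Choose the cheapest volume type whose IOPS cover the requirement."""
    if required_iops <= magnetic_iops:
        return "magnetic"
    if required_iops <= general_purpose_baseline_iops(size_gb):
        return "general purpose (SSD)"
    return "provisioned IOPS (SSD)"


baseline_500gb = general_purpose_baseline_iops(500)
light_workload = pick_volume_type(required_iops=50, size_gb=500)
database_workload = pick_volume_type(required_iops=1200, size_gb=500)
heavy_workload = pick_volume_type(required_iops=5000, size_gb=500)
```

The sketch makes the dependency explicit: with General Purpose volumes the available IOPS grow with the provisioned size, so a small volume with a demanding workload is pushed toward Provisioned IOPS.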

EFS is Amazon’s Elastic File System and is designed for applications that need to handle high workloads and require scalable storage with fast output (schema-on-write?). This type of storage can be accessed from various virtual machines (like servers) and network attached storage (NAS) devices.

References used throughout this series –

AWS. (2018, July 20). index. Retrieved from Amazon Web Services: https://aws.amazon.com/what-is-aws/

Cole, D. (2014). http://www.nybooks.com/daily/2014/05/10/we-kill-people-based-metadata/. Retrieved from http://www.nybooks.com: http://www.nybooks.com/daily/2014/05/10/we-kill-people-based-metadata/

Commission, E. (n.d.). https://ec.europa.eu/info/law/law-topic/data-protection/reform/what-personal-data_en. Retrieved from https://ec.europa.eu/info/index_en: https://ec.europa.eu/info/law/law-topic/data-protection/reform/what-personal-data_en

Ho. Jason Chaffetz, H. M. (2016). OPM Data Breach: How the Government Jeopardized Our National Security for More than a Generation. Washington D.C.: Committee on Oversight and Government Reform – U.S. House of Representatives – 114th Congress.

Inmon, W. (2016). Data Lake Architecture Designing the Data Lake and Avoiding the Garbage Dump. (R. A. Peters, Ed.) Basking Ridge, NJ, USA: Technics Publications.

Linstedt, D. (2016). Building a Scalable Data Warehouse with Data Vault 2.0. Waltham: Todd Green.

Linstedt, D. (2018, May). Data Vault 2.0 Architecture Diagram. Data Vault 2.0 Boot Camp & Private Certification.

Ratan, U. (2017, Sep 29). AWS Big Data Blog. Retrieved from https://aws.amazon.com: https://aws.amazon.com/blogs/big-data/build-a-schema-on-read-analytics-pipeline-using-amazon-athena/