There is a natural fit for AI, ML, DL and NLP in utilizing Data Vault models. In this entry we will explore the fundamentals of this hypothesis. This is not a finished work, but rather something you can consider and possibly build upon. I’d also be interested in your feedback on where this theory falls down and what you would change to make it better. I believe that by leveraging the DVM we can, in fact, improve AI and ML / DL components, more specifically neural networks with feedback loops (CNN: Convolutional Neural Network, or ANN: Artificial Neural Network).

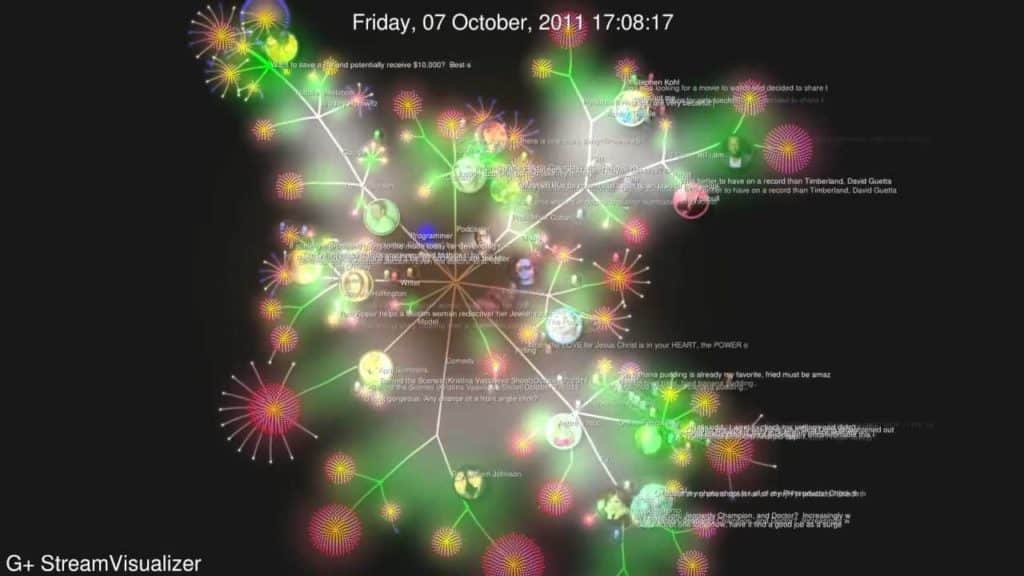

CREDIT FOR THE BLOG IMAGE:

I did not produce this image; it is representative of what I want to produce by applying the theories below. The title image / blog image came from here: https://github.com/justinormont/Fastest-Force-Directed-Graph

First, the acronyms…

- AI = Artificial Intelligence

- ML = Machine Learning

- DL = Deep Learning

- NLP = Natural Language Processing

- DVM = Data Vault Model

Next, the assumptions:

- Text processing has already been addressed

- Speech processing has already been addressed

- Existing neural network technologies, and knowledge of ANNs and CNNs, are leveraged and applied

- Elements of document processing are understood: stemming, word rooting, stop word lists, meaningful words, and word categorization (nouns, adjectives, etc.)

What is the problem we are trying to solve?

This blog is not trying to solve an entire problem, but rather to address one piece of the puzzle: improving our ability to process text, documents, and speech, as something to advance the study of NLP. The idea is to bring the storage and the algorithms together to improve the capabilities of the inference engine. It is my belief that no other “data modeling” paradigm offers such improved capabilities.

An introductory explanation…

Those of you who have taken a deep look at Data Vault Modeling, or who have read my previous work, might understand that the model itself is based on my theories of how the human brain is physically structured, or at least on our understanding of it. Remember: the mind is different from the physical brain, and I am only speaking of the physical brain here; in other words, neurons, dendrites, and synapses. I have long held a theory / opinion that we should be focusing on re-combining form with function, and that combining the two would yield a whole greater than the sum of its individual parts. I suppose we need to narrow the focus even further, to the components of human memory.

References: https://human-memory.net/brain-neurons-synapses/ https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3367555/ and https://en.wikipedia.org/wiki/Neuronal_memory_allocation

What then, is NLP?

The acronym NLP is expanded two ways, as Neuro-Linguistic Programming and as Natural Language Processing, and both expansions have been applied to working with natural language, speech, or the written word. http://www.nlp.com/what-is-nlp/ https://en.wikipedia.org/wiki/Neuro-linguistic_programming Our focus here is Natural Language Processing: https://en.wikipedia.org/wiki/Natural_language_processing

In a nutshell, this blog entry is not here to describe what CNN, ANN, or NLP are, but rather to break some ground on the hypothesis of how to apply and improve NLP by leveraging Data Vault Models and methods. I believe that in order to derive meaning, content first has to be established, followed by associations of content, followed by context (organization in an ontology or taxonomy, or both), followed by assignment / estimation of derived meaning. NLP, or Natural Language Processing, must first break down the constituent parts into categorized semantic components. (This is all my hypothesis, so it very well could be wrong.)

My hypothesis: Tying NLP to Data Vault Models and AI / ML / DL

I would argue that the following steps should be taken to achieve better / more accurate lines of success in NLP:

- Organize by Nouns: in the DVM context, this means identifying nouns as Hub objects, leveraging semantic meaning (same semantic meaning and matches) through taxonomies and ontologies; in other words, semantic peers. Nouns become the “business keys”, the key ideas, or the subjects of the “singular thought”.

- Adjectives: assign adjectives as descriptors to the context, along with a “time-line”. This is not temporality as we usually know it; the time-line in this case has to do with position and placement in the speech or document. Temporality may be considered T(zero) for the top of the document or the start of the speech, then T+1, T+2, and so on going forward. Adjectives are assigned to Satellite components, along with additional scoring components (such as document location, sentence fragments, etc.).

- Multiple nouns in a sentence or thought: these are assigned to Links (associations), to which we also add strength and confidence ratings. These links or associations are established based on what we “grade/learn/know” to be associative or contextually related ideas. They can be assigned through speech or sentence co-locality, or they can be inferred from a taxonomy or ontology that tells us “basically” which ideas are peers, which ideas are or should be related, which ideas are “parent / child”, and so on. By adding strength and confidence ratings (at a minimum) we can grade the respective relationships. In terms of the “mind”, my hypothesis is that assigning this context can, and often does, allow us to visualize: a) vectors, b) strength of relationship, c) quality of relationship, on a “record by record” or “idea by idea” basis. The stronger the relationship, the “bigger” the connection.
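The three steps above can be sketched in a few lines of Python. This is only a minimal illustration, not a finished design: it assumes the text has already been split into sentences and tagged with parts of speech (in line with the assumptions listed earlier), and the names `Hub`, `Satellite`, `Link`, and `build_vault` are hypothetical. Attaching each adjective to the next noun in the same sentence, using co-occurrence count as strength, and using the share of sentences containing a pair as confidence are all simplifying assumptions of mine.

```python
from dataclasses import dataclass, field
from itertools import combinations


@dataclass
class Satellite:
    adjective: str
    position: int          # T(zero) is the first token of the document


@dataclass
class Hub:
    noun: str              # the noun acts as the "business key"
    satellites: list = field(default_factory=list)


@dataclass
class Link:
    nouns: tuple           # alphabetically sorted pair of hub keys
    strength: int = 0      # assumption: number of sentences containing both nouns
    confidence: float = 0.0  # assumption: strength / total number of sentences


def build_vault(sentences):
    """Build hubs and links from sentences given as lists of (word, tag) pairs."""
    hubs, links = {}, {}
    position = 0
    for sentence in sentences:
        pending = []       # adjectives waiting for the next noun in this sentence
        nouns_here = []
        for word, tag in sentence:
            if tag == "ADJ":
                pending.append(Satellite(word, position))
            elif tag == "NOUN":
                hub = hubs.setdefault(word, Hub(word))
                hub.satellites.extend(pending)
                pending = []
                nouns_here.append(word)
            position += 1
        # Sentence co-locality: every pair of distinct nouns gets a link.
        for pair in combinations(sorted(set(nouns_here)), 2):
            links.setdefault(pair, Link(pair)).strength += 1
    for link in links.values():
        link.confidence = link.strength / len(sentences)
    return hubs, links


# Hypothetical, hand-tagged input.
sentences = [
    [("the", "DET"), ("red", "ADJ"), ("car", "NOUN"), ("passed", "VERB"),
     ("the", "DET"), ("old", "ADJ"), ("bridge", "NOUN")],
    [("the", "DET"), ("car", "NOUN"), ("crossed", "VERB"),
     ("the", "DET"), ("bridge", "NOUN")],
    [("the", "DET"), ("car", "NOUN"), ("stopped", "VERB")],
]
hubs, links = build_vault(sentences)
print(hubs["car"].satellites)               # [Satellite(adjective='red', position=1)]
print(links[("bridge", "car")].strength)    # 2
```

A taxonomy-driven link (peers, parent / child) would be added alongside the co-locality links; it is left out here to keep the sketch short.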

When thinking this way, and assigning a form of deep learning or ML algorithm as the primary function, we can begin to extrapolate or infer which ideas are “stronger than others”, and which ideas are represented in documents or speech. We can then draw inferences at the end (again by leveraging the overall taxonomy or ontology as a domain guide). The inferences can vary depending on the level of the taxonomy we access. The strength and confidence of the inferences can also be projected, based on a number of mathematical algorithms (or neural models), to lend each inference a percentage of correctness.
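To make the “level of the taxonomy” point concrete, here is a small sketch. It assumes each noun already carries a numeric strength and that the taxonomy is a simple child-to-parent mapping; the `TAXONOMY` contents and the function names `roll_up` and `idea_shares` are hypothetical. The normalized share it returns is only a stand-in for a percentage of correctness, not a real confidence model.

```python
from collections import Counter

# Hypothetical taxonomy, stored as child -> parent.
TAXONOMY = {
    "car": "vehicle",
    "truck": "vehicle",
    "vehicle": "transport",
    "bridge": "infrastructure",
    "road": "infrastructure",
}


def roll_up(term, levels):
    """Walk up the taxonomy by at most `levels` steps."""
    for _ in range(levels):
        if term not in TAXONOMY:
            break
        term = TAXONOMY[term]
    return term


def idea_shares(hub_strengths, levels):
    """Aggregate noun strengths at a taxonomy level and normalize to shares."""
    totals = Counter()
    for noun, strength in hub_strengths.items():
        totals[roll_up(noun, levels)] += strength
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {idea: value / grand_total for idea, value in totals.items()}


# Hypothetical strengths per noun.
hub_strengths = {"car": 3, "truck": 1, "bridge": 2, "road": 2}
print(idea_shares(hub_strengths, levels=0)["car"])  # 0.375
print(idea_shares(hub_strengths, levels=1))         # {'vehicle': 0.5, 'infrastructure': 0.5}
```

The same input yields different inferences at different levels: “car” is the strongest single idea at level 0, while one level up “vehicle” and “infrastructure” are equally represented.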

I believe that by processing natural language in this manner, we can begin to visualize, in 3 dimensional space, just what content is being applied. The “center” of the model (in a 3D vector driven / defined space) is the most important component, and should be the “largest” central node in the network. The connections can be “flown through” and visualized, and different filters for strength and confidence can be applied to the visualization to show “ideas with different gradients of thought”.
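As a rough sketch of the filtering and “largest central node” idea, the snippet below works on a plain list of links before any rendering takes place. The tuple layout, the sample `LINKS` data, and the function names are hypothetical, and sizing a node by the summed strength of its connections is one possible choice of metric that I am assuming here.

```python
from collections import defaultdict

# Hypothetical links: (noun_a, noun_b, strength, confidence).
LINKS = [
    ("car", "bridge", 5, 0.9),
    ("car", "driver", 3, 0.8),
    ("bridge", "river", 2, 0.4),
    ("driver", "license", 1, 0.3),
]


def filter_links(links, min_strength=0, min_confidence=0.0):
    """Keep only the links that pass both thresholds."""
    return [
        link for link in links
        if link[2] >= min_strength and link[3] >= min_confidence
    ]


def node_sizes(links):
    """Size each node by the summed strength of its links (an assumed metric)."""
    sizes = defaultdict(int)
    for noun_a, noun_b, strength, _confidence in links:
        sizes[noun_a] += strength
        sizes[noun_b] += strength
    return dict(sizes)


def central_node(links):
    """Return the node with the largest size, or None for an empty graph."""
    sizes = node_sizes(links)
    return max(sizes, key=sizes.get) if sizes else None


print(central_node(LINKS))                               # car
confident = filter_links(LINKS, min_confidence=0.5)
print(node_sizes(confident))                             # {'car': 8, 'bridge': 5, 'driver': 3}
```

The filtered link list and node sizes are the kind of input a force directed graph layout would consume; the layout itself is out of scope for this sketch.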

Finally, the vector of distance and direction can be computed / inferred from two factors. The first factor, distance, is the distance in the speech / document measured against the distance in the taxonomy (obviously both are biased, but you have to start somewhere). The second factor, direction, can be inferred from the language of reference: a noun that “will be defined later” is a forward / outgoing direction, whereas a noun or idea that references something previously discussed is a reverse or incoming direction.
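The two factors can be sketched as follows. This assumes token positions are already known, that the taxonomy is a tree stored as a child-to-parent mapping, and that taxonomy distance is the number of steps between two terms through their nearest common ancestor; all names and sample data are hypothetical. How the two distances should be weighed against each other is not settled here, so the sketch simply returns both.

```python
def ancestors(term, taxonomy):
    """Return the chain from a term up to its root (assumes no cycles)."""
    chain = [term]
    while term in taxonomy:
        term = taxonomy[term]
        chain.append(term)
    return chain


def taxonomy_distance(term_a, term_b, taxonomy):
    """Count steps between two terms via their nearest common ancestor."""
    chain_a = ancestors(term_a, taxonomy)
    chain_b = ancestors(term_b, taxonomy)
    for steps_a, term in enumerate(chain_a):
        if term in chain_b:
            return steps_a + chain_b.index(term)
    return None  # the terms share no ancestor


def link_vector(ref_pos, target_pos, ref_term, target_term, taxonomy):
    """Return (document distance, taxonomy distance, direction) for a reference."""
    doc_distance = abs(target_pos - ref_pos)
    tax_distance = taxonomy_distance(ref_term, target_term, taxonomy)
    # Forward: the referenced idea appears later in the text; otherwise reverse.
    direction = "forward" if target_pos > ref_pos else "reverse"
    return doc_distance, tax_distance, direction


# Hypothetical taxonomy, stored as child -> parent.
taxonomy = {
    "car": "vehicle",
    "truck": "vehicle",
    "vehicle": "thing",
    "bridge": "structure",
    "structure": "thing",
}
print(taxonomy_distance("car", "truck", taxonomy))       # 2
print(link_vector(2, 40, "car", "bridge", taxonomy))     # (38, 4, 'forward')
print(link_vector(40, 2, "bridge", "car", taxonomy))     # (38, 4, 'reverse')
```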

Wrapping this up…

Data Vault Models were built / meant to house data from within a neural network, a working neural network (more on this to come). Data Vault models (from day 1) were meant to be living, breathing and dynamically changing nodes. Data Vault models are the perfect place to store operational data (including a history of decisions made by a neural net), so that further analysis can reveal how the conclusions are reached! This may not be groundbreaking news to some of you, but right now in the AI / ML / DL industry, people are running around stating that they have NO CLUE how the neural net reached the decision it reached. I believe we have an answer right here, leveraging DV models (this is a topic for another blog entry).

As far as NLP / natural language processing and the role Data Vaults play, I strongly believe that within a few weeks’ time (from the authoring of this blog post) I can help anyone wanting to put this into practice, and that we can demonstrate a working model and prove that these theories at least hold water as a starting point. I have built what I call a dynamic data warehouse in the past that already leverages many of these techniques, although we did not use natural language / documents / text or speech as its input. I am positive that we can apply the same techniques to move the AI / ML / DL industry forward by leaps and bounds.

As I stated, visualizing the Data Vault Model in a 3 dimensional space (at that point) becomes very easy. The visualizations can utilize force directed graphs with the metrics I have proposed, and leverage the filters on top to view NLP processed text in entirely new ways. AI predictive analysis leveraging these filters can then produce unexpected and startling outcomes, possibly revealing the unknown unknowns, which should be (truthfully) what AI is all about. Anyone game to work with me on this? Use the CONTACT US form here on the site to get in touch with me directly.